Some live screen-capture footage I’ve taken while developing (and playing with) (( OLoS )).

Sign up to join the beta at olos.cc.

Just making some noise with OLoS. An oscillator can now act as a clock, an idea inspired by Tone.js.

When people look at their phones during an event, that’s generally an indication of boredom. And when people bury their heads in screens on the subway, it’s an escape from the public space, a way to avoid making eye contact with strangers.

I do these things all the time. I know it’s rude. But it’s becoming a social norm as we come to terms with the myriad ways our devices augment our reality.

What if our devices could enhance, rather than diminish, our sense of presence? What if you could connect with people around you through shared participation in an augmented reality?

I’m working with Jia to develop an immersive audio-visual experience using smartphones, Bluetooth, and possibly Google Cardboard. It’s a creative exploration of interactions mediated by technology.

We’re homing in on a couple of variations on this concept, which Jia outlined here.

One idea is that every participant will emit a frequency that fellow participants can hear and modulate. The “carrier frequency” can have multiple parameters, like amplitude, detune, filter frequency, delay time, and delay feedback. The amount of modulation depends on the distance between the modulator and the carrier. As participants get closer, they’ll modulate each other’s frequencies with greater intensity. Some of the frequencies might be slow enough that they’ll create a rhythm, while others will be faster to create a sort of drone.
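To make the distance idea concrete, here is a minimal sketch of one possible mapping. Everything in it is an assumption for illustration: the linear falloff, the 10 meter range, the 50 Hz maximum detune, and the function names are all hypothetical, and we haven’t settled on any of these values.

```python
import math


def modulation_depth(distance_m: float, max_range_m: float = 10.0) -> float:
    """Map distance to a depth in [0, 1]: 1 when touching, 0 at or beyond max range.

    The linear falloff and the default range are assumptions, not project values.
    """
    if max_range_m <= 0:
        raise ValueError("max_range_m must be positive")
    clamped = min(max(distance_m, 0.0), max_range_m)
    return 1.0 - clamped / max_range_m


def modulated_frequency(
    carrier_hz: float,
    modulator_hz: float,
    distance_m: float,
    t: float,
    max_detune_hz: float = 50.0,  # hypothetical ceiling on the detune amount
) -> float:
    """Instantaneous carrier frequency at time t (seconds) under a sine modulator."""
    depth = modulation_depth(distance_m)
    return carrier_hz + depth * max_detune_hz * math.sin(2 * math.pi * modulator_hz * t)
```

A modulator below roughly 20 Hz would be heard as a pulse or rhythm, while faster ones blur into timbre, which is the rhythm-versus-drone split described above. The same depth value could just as easily scale filter frequency or delay feedback instead of detune.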

Here’s a sketch of what this might be like using one phone and some Estimotes:

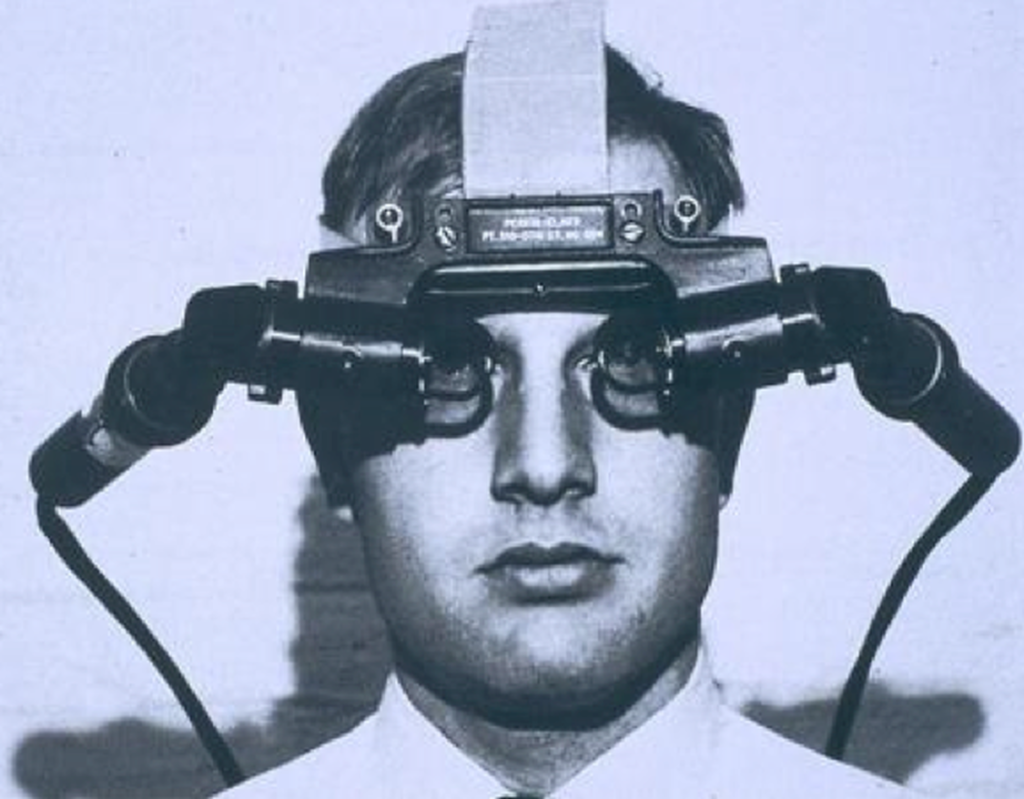

The frequencies can be mapped to visuals as well. The participants would wear their phones over their eyes with Cardboard, and they’ll see a slightly augmented version of what’s in front of them.

Initially we’d hoped to use three or four iBeacons to triangulate every participant’s location in the room. If the phone is on your head, we could use the compass to figure out which direction participants are facing. From there, we could associate each frequency with specific coordinates in the room, and run these through the Web Audio API’s Head-Related Transfer Function (HRTF) panning to create a 3D/binaural audio effect. That’s probably out of the scope of this project, but it would be pretty cool!
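For the curious, here is a rough sketch of the geometry involved. Strictly speaking, locating someone from beacon distances is trilateration rather than triangulation. The sketch assumes a flat 2D room, three beacons at known positions, and clean distance estimates. Real beacon distances derived from signal strength are noisy, so this is the idealized version only, and the function names are made up for illustration.

```python
import math


def trilaterate(b1, b2, b3):
    """Estimate (x, y) from three beacons, each given as (x, y, distance).

    Subtracting the first circle equation from the other two leaves a
    2x2 linear system, solved here with Cramer's rule.
    """
    (x1, y1, r1), (x2, y2, r2), (x3, y3, r3) = b1, b2, b3
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    c1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    c2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("beacons are collinear; position is ambiguous")
    return (c1 * a22 - a12 * c2) / det, (a11 * c2 - a21 * c1) / det


def relative_azimuth(listener_xy, heading_deg, source_xy):
    """Angle of a source relative to where the listener faces, in (-180, 180].

    Assumes +y is north and the compass heading is clockwise from north.
    Positive results mean the source is to the listener's right.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    bearing = math.degrees(math.atan2(dx, dy))
    relative = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    return 180.0 if relative == -180.0 else relative
```

The relative angle is the piece a binaural panner would need: each sound’s position expressed in terms of where the listener’s head is pointing.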

Inspiration:

I was inspired by “Drops,” one of several IRCAM CoSiMa projects, which was also performed at the Web Audio Conference.

Janet Cardiff’s Walks, with binaural audio

Audience participation in performances by Dan Deacon and Plastikman, though that’s not quite what we’re going for.

Golan Levin’s Informal Catalog of Mobile Phone Performance – lotsa good inspiration here.

–> slides <–

Where I’m At is a sonic graffiti app for iPhone and Android.

The main user interface consists of a record button and a map.

When you open the app, or hit the refresh icon, a bunch of things happen: It uses the geolocation API to fetch your location. Then it sends your latitude/longitude to the Foursquare API to get locations near you. It changes the location listed below the record button to the location nearest you. When you click on the name, you’ll see that the app has repopulated the list of available locations. It also uses your lat/long to center the map, which uses the Google Maps API. And it gets the most recent data from my database, populating the map with pins representing sonic graffiti tags.

When there are multiple tags at a location, only the most recent tag is accessible through the map. It would be interesting to also provide access to the history of sounds, and find a way of representing the date on which the sounds were recorded (which I am storing in the database).
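Here is a small sketch of how the “most recent tag wins, but history is still available” idea could work. The field names (`location_id`, `recorded_at`, `file`) are hypothetical and not the app’s actual schema, and `recorded_at` is assumed to be a Unix timestamp.

```python
from collections import defaultdict


def tag_history(tags):
    """Group tags by location, newest first within each location."""
    history = defaultdict(list)
    for tag in tags:
        history[tag["location_id"]].append(tag)
    for entries in history.values():
        entries.sort(key=lambda t: t["recorded_at"], reverse=True)
    return dict(history)


def latest_tags(history):
    """Pick the tag that would show on the map for each location."""
    return {location_id: entries[0] for location_id, entries in history.items()}
```

With the history grouped this way, the map could keep showing one pin per location while a detail view lists the older recordings with their dates.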

It’s currently anonymous so there is no login. It would be interesting to allow sign in, so that you could follow friends and hear their tags. For now that’s not necessary because I’m already friends with all the current users, and there are only a few of us.

I set up a LAMP server on Digital Ocean following these directions. From there, I was able to use PHP scripts to handle file uploads, and to save JSON-structured data to a text file.
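My server side is PHP, but the storage idea is simple enough to sketch in a few lines of Python. This is not the actual script, just an illustration of appending one JSON record per line to a text file and reading them back. The function names and the record fields are made up.

```python
import json
from pathlib import Path


def append_tag(path, tag):
    """Append one tag as a single line of JSON."""
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(tag) + "\n")


def load_tags(path):
    """Read every stored tag back; returns an empty list if the file is missing."""
    p = Path(path)
    if not p.exists():
        return []
    with p.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

One record per line keeps appends cheap and means a partially written line can only damage the last record, though a real database would handle concurrent uploads more safely.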

USER RESEARCH / FUTURE PLANS

One of my major questions is: how would people use audio? So far that’s still a bit unclear. People are shy about using their voice in a way that they aren’t with photo and/or text apps. Some want to leave audio tips about locations, others want to leave notes for their friends.

I’ve found that the 10 second restriction is cool once people get the hang of it, but some wanted to make longer recordings. Should I allow for this, or keep the limitation? Maybe sounds should be limited to 16 seconds or something just a bit longer.

I like how simple the interface is, because the recordings people make are raw. They have to be their own editor: all they have is a button to start, and when they let go the recording is done. But people sometimes want to make a longer recording and then trim it down after, similar to Instagram. In the process, they could even add effects, samples, and/or mix/stitch multiple recordings together to create their audio graffiti. I think I could do it with something like EZ-Audio and an NSNotification (for iOS at least). This has the potential to become a rabbit hole, especially because right now I’m not doing anything to access the audio buffer, just using the Media plugin, which has very limited functionality.

Users were confused about whether the map shows locations that you can select in order to tag them, or whether it shows existing tags near you. Currently, it’s the latter, but this would be clearer if the pins were more than just red dots – they could show the name of the location and an audio icon or date of the recording relative to the current time.

The map should show your current location, and update when you change locations or re-open the app.

The app needs a more cohesive visual aesthetic. During class, I got some feedback encouraging me to go for more of a “graffiti” aesthetic.

I’d like to analyze the sounds that are recorded and provide some data about them. For example, I could send audio to the Echo Nest to detect what song is playing, and detect some data about the recording such as loudness. I’d like to offer sonic profiles of spaces so that the app becomes usable even without listening, since it’s not always convenient to wear headphones or hold the phone up to your ear, let alone allow some piece of unvetted sonic graffiti to blast out of your speaker in public.
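Loudness is one piece of that profile that doesn’t need an outside service. As a rough sketch, assuming I can get at the decoded samples as floats between -1 and 1 (which the Media plugin doesn’t currently give me), the RMS level in dB relative to full scale could be computed like this. The function name is just for illustration.

```python
import math


def rms_dbfs(samples):
    """RMS level of float samples in [-1, 1], in dB relative to full scale.

    A full-scale square wave reads 0 dBFS; digital silence reads -inf.
    """
    if not samples:
        raise ValueError("need at least one sample")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")
    return 10 * math.log10(mean_square)
```

RMS is only a crude stand-in for perceived loudness, but it would be enough to label a tag as quiet, moderate, or loud before anyone presses play.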

If somebody leaves a tag at Fresh & Co saying “the tuna sandwich is fine now” in response to somebody else’s tag, it should maintain that thread. I’m not sure whether tags should allow the user to enter optional keywords, or whether I should add the ability to make a tag in response to another tag to create a discussion, similar to Twitter.

In the meantime, Kyle tipped me off to an ITP thesis project from 2012 that has some similarities: Dig.It by Amelia Hancock.

Where I’m At is a sonic graffiti app that tags locations with audio. I’ve been working on it, and I’m currently getting nearby locations with Foursquare and saving audio files to my remote server.

Every location has only the most recent audio file associated with it, so there is some competition to tag the place with your sound, like when you’re a graffiti artist and your work gets painted over. Maybe there can be a way to access history, but it will be an advanced feature.

To do list:

- Ability to pull in audio files from the server, and data from the database (so far I’m just posting to it).

- Visualize the nearby locations on a map with pins representing recent audio.

- User logins (optional) will get contacts and create a list of friends who you can follow, in addition to browsing by what’s around you.

- Send a push notification when you’re near a sound somebody has left.

- Hold up phone to your ear to hear a random recording, walkie talkie style, of a sound from nearby. Like WeChat or ChitChat. So when you get a push notification you can listen privately without plugging in headphones, or broadcast it.

- Recognize if music is playing and/or tag songs via Spotify API… I wonder if I should focus more on tagging/listening to recorded music, less on recording/sharing audio?

- What should the visual language be for an audio app?

- How do I find the closest sounds for any given location? In the long run, I would need a better data structure, because I’d want to be able to quickly search just for the locations that are nearby, and prepare their corresponding audio.

- How can I visualize the frequency spectrum as audio is being recorded/played back? The Media API doesn’t provide access but I’d like to figure this out.
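On the closest-sounds question from the list above, the brute-force version is easy to sketch: compute the great-circle distance from the user to every tag with the haversine formula, keep the ones within some radius, and sort. The `lat`/`lon` field names, the 500 meter radius, and the limit of 10 are assumptions for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/long points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearest_tags(tags, lat, lon, radius_m=500.0, limit=10):
    """Return up to `limit` tags within `radius_m` of (lat, lon), closest first."""
    scored = [(haversine_m(lat, lon, t["lat"], t["lon"]), t) for t in tags]
    nearby = [pair for pair in scored if pair[0] <= radius_m]
    nearby.sort(key=lambda pair: pair[0])
    return [tag for _, tag in nearby[:limit]]
```

This scans every tag on each request, which is fine for a handful of users. With many more tags, some kind of spatial index (a geohash prefix, for example) would probably be the better data structure.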

More importantly, how would people want to use this? I’m still researching location-based audio, and I’d like to focus on one aspect that will help me make decisions about what to do next, how to prioritize features, the visual design etc.

1. This could be for sharing audio recordings with strangers and/or friends. I’ve been looking at Chit Chat for inspiration.

2. This could be for sharing location-based music – it tags music that’s playing like Shazam or lets you search for a song and share it with your location. Example: Soundtracking.

3. If this was an app for creating and publishing site-specific Audio Tours, I could do both those things, and might have a clearer picture of who would use this. There would be tour guides, and tourists. It could allow users to trigger songs, or audio recordings, and might also need to orient based on the compass. There are already some apps for this, like toursphere, locacious and locatify.

Homework assignment #2 from Ken Perlin’s computer graphics class, source code here.