Title

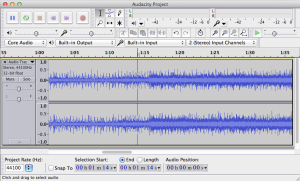

Web Audio Editor: A simple audio editor that runs in the browser, designed for kids ages 7 and up (URL // source code)

Description

Much of the Web Audio Editor’s functionality comes from SoX, a command-line utility that has been around for over two decades. Shawn Van Every introduced me to SoX in CommLab Web and helped me set it up on my server so that I can call it from PHP. The frontend is built on Wavesurfer, a JavaScript library that draws the audio waveform on an HTML canvas. I also added a second canvas that visualizes the frequency spectrum, inspired by ICM and Marius Watz’s Sound as Data workshop. I am continuing to develop this project and would be thrilled to present it at the ITP show.
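
To give a rough idea of how a frequency spectrum canvas like this can work, here is a minimal sketch using the browser's Web Audio API. It is an illustration under assumptions, not the editor's actual code: the function names `spectrumBars` and `drawSpectrum` are hypothetical, and it assumes an `AnalyserNode` is already connected to the audio source.

```javascript
// Hypothetical helper (not from the project): turn analyser byte data
// (one value from 0 to 255 per frequency bin) into bar rectangles
// sized to fit a canvas of the given width and height.
function spectrumBars(bins, width, height) {
  const barWidth = width / bins.length;
  const bars = [];
  for (let i = 0; i < bins.length; i++) {
    const barHeight = (bins[i] / 255) * height;
    bars.push({
      x: i * barWidth,
      y: height - barHeight, // canvas y grows downward, so bars rise from the bottom
      width: barWidth,
      height: barHeight,
    });
  }
  return bars;
}

// Browser-only sketch: read the current spectrum from an AnalyserNode
// (assumed to be connected already) and draw one frame on a canvas.
function drawSpectrum(analyser, canvas) {
  const bins = new Uint8Array(analyser.frequencyBinCount);
  analyser.getByteFrequencyData(bins);
  const ctx = canvas.getContext("2d");
  ctx.clearRect(0, 0, canvas.width, canvas.height);
  for (const bar of spectrumBars(bins, canvas.width, canvas.height)) {
    ctx.fillRect(bar.x, bar.y, bar.width, bar.height);
  }
}
```

Calling `drawSpectrum` inside a `requestAnimationFrame` loop would redraw the bars as the audio plays. Keeping the geometry in `spectrumBars` separate from the drawing makes it possible to check the math without a browser.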