codeofmusic

Hookpad is a sketch pad for musical composition that focuses on two primary elements: Chords and Melody.

Even with this focus, it’s an ambitious task, because many secondary elements come into play in musical composition. Hookpad walks the line between providing important features and imposing constraints that help guide the user towards creative composition. There is an overarching music theory component because it’s a product of Hooktheory, and as such it is a very useful educational tool. But sometimes this connection to traditional notation feels constraining: it limits the potential for exploration.

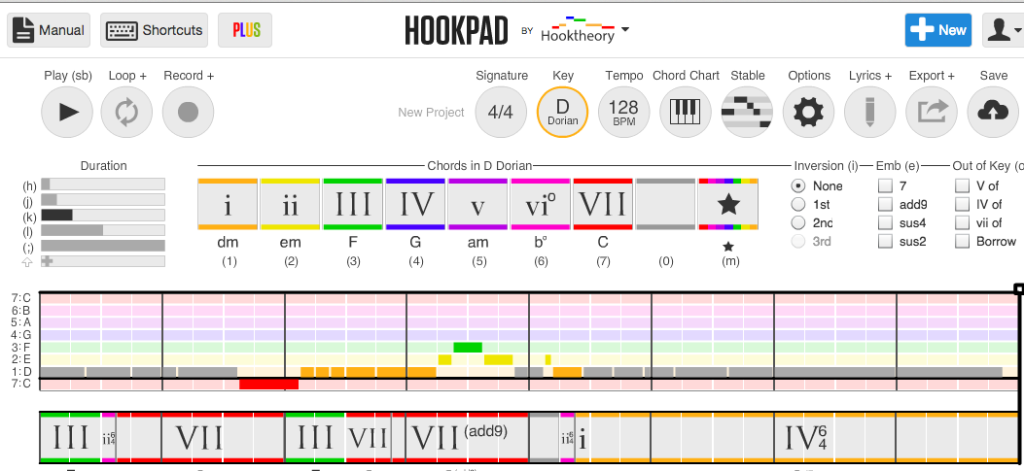

It was not immediately clear where to begin with Hookpad. Hookpad has two separate timelines, one for Chords and one for Melody, but these are both towards the bottom of the page. At the top, there is a bar of global settings for the song such as signature, key, and tempo. Below that, there are settings to set the duration of the next note of a melody, and a whole slew of settings for the chord. Finally, there are the Chord and Melody timelines. All of these areas are related and useful, but I think they can be overwhelming.

Many of the settings serve to reinforce the user’s knowledge of music theory. But to a novice, or to someone with a passing knowledge, they can be distancing. I have taken theory, but I’m more used to recording myself playing an instrument, or using DAWs like Ableton, Logic or Fruity Loops to sketch out my compositions.

So initially I saw Hookpad as an alternative to the MIDI Piano Roll that just happens to emphasize theory. My instinct was to explore my scales and improvise new compositions using the ASCII keyboard as if it were a MIDI instrument.

Hookpad has a slew of shortcuts mapped onto the keyboard that could be very powerful, but I find them distracting from a more intuitive form of composition: improvisation. In fact, the shortcuts load on their own separate page, which requires navigating away from the current page. Through keymappings, we can step through the notes and chords within a scale by pressing the up and down arrows while the chord or melody timeline is selected. More importantly, the shortcut keymap allows the user to place different chords or melody notes on the timeline by pressing the numbers 0 through 7. But chords/notes can only be triggered via ASCII some of the time.

The shortcuts allow you to set the duration of your note-placing tool. Initially I found this to be an awkward way to compose. While users can trigger chords and individual notes of a potential melody, Hookpad affords no improvisatory mode when it is initially loaded.

The ability to change the key’s root and mode is fun, especially if you already have an idea of what modes like Mixolydian mean from some music theory training. It would be useful to hear the mode played back when it is selected. Or, one might expect to be able to play the scale back with keymapped shortcuts.

So the first thing a user might do would be to choose a key and mode, and explore what it sounds like by pressing the keys 0-7. But in the default mode, by doing so they will be writing a composition onto the piano roll. Obviously this can be deleted. But it is an awkward introduction to the compositional process. It doesn’t allow any time to get to know the key/mode, or to play with rhythm, because every note starts with a default rhythm. Changing the duration requires hitting a separate button before placing a note in its position on a sequencer or pressing a key 0-7. This is an awkward way to program a step sequencer, and counterintuitive to the way that musical instruments are played. Instruments control duration by triggering a sound and then, typically, stopping it, so the duration is shaped in real time.
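To make the "trigger, then stop" idea concrete, here is a minimal sketch of how a held key could be turned into a note duration. This is not how Hookpad works; `quantizedBeats` and its parameters are names I made up for illustration, and I'm assuming a grid of four steps per beat.

```javascript
// Hypothetical helper: convert key-down / key-up timestamps (in milliseconds)
// into a note duration in beats, snapped to the nearest grid step.
function quantizedBeats(downMs, upMs, bpm, stepsPerBeat = 4) {
  const msPerBeat = 60000 / bpm;
  const heldBeats = (upMs - downMs) / msPerBeat;
  // Never return a zero-length note: the shortest note is one grid step.
  const steps = Math.max(1, Math.round(heldBeats * stepsPerBeat));
  return steps / stepsPerBeat;
}

// A key held for 520 ms at 120 BPM (500 ms per beat) snaps to one beat.
console.log(quantizedBeats(1000, 1520, 120)); // 1
```

The performer decides the duration by letting go of the key, and the grid only cleans it up afterwards.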

Creating an improvisatory mode would make for a much more exciting compositional process. This way, I could use my ears to explore the rules of music theory and compose, allowing the underlying theory to reveal itself naturally.

Instead, there is Record mode. Record mode is misleading. It is limited for non-PLUS users. There are also problems with it. For example, on Chrome, every sample clips on the attack while I am recording. On playback, my “recording” doesn’t clip (thankfully). But that’s not the only difference I hear when listening back to my transcription… The rhythm of my chord progression is not as I remembered playing it during my performance. It is both familiar (generic) and unfamiliar (as in, did I play this?). It has taken on a rhythmic emphasis that I did not intend. In between the attack/decay of my ASCII keyboard performance, it has imposed a new rhythm on the chord progression.

I’d like to think that there is some reason for imposing additional rhythmic details onto my recorded improvisation. Maybe the goal is that I should learn to obtain the rhythmic emphasis I originally intended (and have since forgotten, because I was improvising) by getting meticulous with how I might notate that rhythm. But Hookpad’s interface doesn’t make it easy to notate/program natural rhythms for the chord progression, even if I did remember. I’ll use strumming as an example (even though Hookpad’s guitar can’t actually make any sound; it is only used for showing you how to finger the chords that are played back on piano). If I make a single chord last a full bar, I’d expect one strum to last a whole note. But it adds additional strums. For more detailed strumming patterns, it’s hard to drag/subdivide that chord into the exact rhythm I want because I can’t make it sustain, and I can’t remember the shortcut to copy or change the duration. I think hand notation might be easier: I’d just have to draw a familiar shape to represent what I want.

Hookpad implements rests in a way that makes a lot of sense given its roots in Hooktheory. It is impossible to get from one point to another without something in between: whether it is notes or rests, there must be some notation. However, for those who are used to the MIDI piano roll or step sequencers, where blank space between notes is treated as a rest, this may be confusing. Perhaps because of this, it is impossible to drag notes from one position to another. Instead, you have to get there with something, even if that something is a rest. Rests can be the most important part of a composition, and in Hookpad, you really have to think about them. (Alternatively, from my experience and what I saw in class, you can still ignore them and just make really intense music with no space.)

Initially, the chord and melody rolls only go up to 7. There is no resolution of the octave. This is a frustration I have with many interfaces. It was not immediately obvious that more notes were available by hitting up/down while a note is selected to eventually cross the octave threshold. But at least it is possible. After extending the octave, the keymappings are still limited, and notes must be moved out of the octave by selecting them and pressing the up or down arrows. It would be much easier to drag. It would also be useful to be able to set the octave of a performance rather than having to raise/lower each note afterwards by pressing the arrow keys. Though I wish it could change octave, I understand why, as a music theory app, Hookpad does not allow us to change the range of the mappings of 0 (rest) to 7 (7th note in the scale).
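Here is a rough sketch of what a keymapping with a settable octave could look like. It is my own illustration, not Hookpad's code: `MODES`, `degreeToMidi` and the `octaveShift` parameter are hypothetical names, and the interval tables are just the standard definitions of those modes.

```javascript
// Semitone offsets from the root for a few modes (standard definitions).
const MODES = {
  major:      [0, 2, 4, 5, 7, 9, 11],
  mixolydian: [0, 2, 4, 5, 7, 9, 10],
  minor:      [0, 2, 3, 5, 7, 8, 10],
};

// degree: 0 is a rest, 1..7 are scale degrees (as in the 0-7 keymapping).
// octaveShift: whole octaves up (+) or down (-) for the whole performance.
function degreeToMidi(rootMidi, modeName, degree, octaveShift = 0) {
  if (degree === 0) return null; // rest
  const intervals = MODES[modeName];
  if (!intervals || degree < 1 || degree > 7) {
    throw new Error("unknown mode or degree out of range");
  }
  return rootMidi + intervals[degree - 1] + 12 * octaveShift;
}

// 7th degree of C Mixolydian (root = MIDI 60) is B flat (MIDI 70).
console.log(degreeToMidi(60, "mixolydian", 7)); // 70
```

With something like this, the keys 0-7 keep their theory-friendly meaning while one extra setting moves the whole performance up or down.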

I tried to create a composition:

I ran into some hurdles while composing. The biggest hurdle is that I can only use record mode 2 or 3 times (unless I upgrade to PRO). Copying and pasting are really important (repetition is key!). Hookpad’s copy/paste implementation required making some decisions about what happens when you try to paste over a preexisting space. For now, it will simply not paste, rather than replace or displace the existing block. But I can’t drag to select chords, only melody. Similarly, I can’t copy the melody as an entire block the way you can with chords. Both methods have affordances that would serve each other in any compositional process that incorporates repetition.

Hookpad can tie into YouTube and knows the chords to many popular songs, which is definitely going to be appealing for many, though it is not as fun for me as improvisation. However, with this database, it has developed a model called the Magic Chord. It can suggest a chord that you might want to play next, based on its analysis of lots of popular songs, and give an example of a song that used that transition with a link to hear it on YouTube. This is really cool in theory. However, use of the Magic Chord is limited without PRO mode, and I suspect that it is also something that would require a lot of calibration if users wanted to step outside of familiar modes/progressions and into more experimental territory.
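I don't know how the Magic Chord actually works. As a guess at the general idea, here is a toy sketch that counts which chord most often follows another across a set of progressions. The progressions in `toySongs` are made up for illustration, and `buildTransitions` and `suggestNext` are hypothetical names.

```javascript
// Count how often each chord is followed by each other chord.
function buildTransitions(progressions) {
  const counts = new Map();
  for (const prog of progressions) {
    for (let i = 0; i < prog.length - 1; i++) {
      const from = prog[i];
      const to = prog[i + 1];
      if (!counts.has(from)) counts.set(from, new Map());
      const row = counts.get(from);
      row.set(to, (row.get(to) || 0) + 1);
    }
  }
  return counts;
}

// Suggest the most frequent follower of `chord`, or null if it was never seen.
function suggestNext(counts, chord) {
  const row = counts.get(chord);
  if (!row) return null;
  let best = null;
  let bestCount = 0;
  for (const [to, n] of row) {
    if (n > bestCount) {
      best = to;
      bestCount = n;
    }
  }
  return best;
}

// Made-up progressions in Roman numerals, not real song data.
const toySongs = [
  ["I", "V", "vi", "IV"],
  ["I", "IV", "V", "I"],
  ["vi", "IV", "I", "V"],
];
const transitions = buildTransitions(toySongs);
console.log(suggestNext(transitions, "I")); // "V"
```

A model like this can only ever suggest what it has already seen, which is why I suspect it would need calibration for more experimental progressions.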

Hookpad is a promising interface for composing within a set of constraints rooted in music theory, and exploring the rules of theory through creative exploration. It could be more welcoming to novices and more closely connected to the compositional process if it placed a greater emphasis on live improvisation/performance as the default mode, rather than blocking record mode as a premium feature.

I’m really inspired by the Web Audio API. Anybody on a desktop web browser can just open up their JavaScript console, give themselves some oscillators, and make a modular synth.
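For example, something like this is enough to make a sound. It's a minimal sketch: `playTone` is a name I made up, and it assumes `ctx` is a Web Audio `AudioContext`, so it only makes sound in a browser.

```javascript
// Play a sine tone through the given AudioContext for a number of seconds.
function playTone(ctx, frequency = 440, seconds = 0.5) {
  const osc = ctx.createOscillator();
  const gain = ctx.createGain();
  osc.type = "sine";
  osc.frequency.value = frequency;
  gain.gain.value = 0.2; // keep the level modest
  // Signal chain: oscillator -> gain -> speakers.
  osc.connect(gain);
  gain.connect(ctx.destination);
  const now = ctx.currentTime;
  osc.start(now);
  osc.stop(now + seconds);
  return osc;
}

// In a browser console:
//   playTone(new AudioContext(), 220, 1);
```

Chain a few more nodes between the oscillator and the destination and you are on your way to a modular synth.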

I’m also inspired by the web’s potential as an educational resource where you can learn by remixing other people’s work. For example, kids are learning how to code by remixing Scratch projects.

There have been attempts to create a standardized platform to share Web Audio code modules. For example, component.fm (it’s really cool!). Similarly, there are application-specific sites like sccode, for people interested in sharing SuperCollider code. There are also sites that let people make music by adding a visual interface or live coding environment to the Web Audio API. For example, Web Audio Playground, Wavepot, Gibber. And then there are sites that just let people make music in a browser, like AudioTool and Soundation.

I want to make a website where people can not only share/remix each other’s modules through code, but also make music with them. The site’s temporary title/url is WAAM.cc or WAMod.cc. It would serve two general audiences: people who code, and people who make music. Sometimes, these audiences overlap.

The site allows users to store and browse at least two types of data objects:

- Musical patterns – kind of like MIDI scores: a series of note on/off events with velocity, across multiple voices/channels

  - patterns have a BPM, beat-length, tatum-length, loop on/off

  - patterns can include multiple voices

  - patterns can be programmed “live” using MIDI or ASCII. Patterns can also be programmed via a visual interface. Patterns will display in a visual interface.

- Synth instruments – the voices used to play back musical patterns

  - synths output digital audio

  - synths have a standardized constructor and methods like

    - triggerAttack

    - triggerRelease

  - synths have “public” variables that can be modified through a visual interface, or mapped to MIDI input

  - synths have authors and contributors
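To get a feel for how these two objects might fit together, here is a rough sketch. Nothing here is final: the field names (`beatLength`, `tatumLength`, `params` and so on), the `LogSynth` class and `schedulePattern` are all placeholders I made up, and the synth only logs events instead of outputting audio.

```javascript
// Hypothetical pattern: timing is in beats, velocity is 0..1.
const pattern = {
  bpm: 120,
  beatLength: 4,     // length of the pattern in beats
  tatumLength: 0.25, // smallest subdivision, in beats
  loop: true,
  voices: [
    {
      synth: "lead",
      notes: [
        { start: 0, duration: 1, midi: 60, velocity: 0.8 },
        { start: 1, duration: 0.5, midi: 64, velocity: 0.6 },
      ],
    },
  ],
};

// Stand-in synth with the standardized method names. A real synth would
// output digital audio; this one just records what it was asked to do.
class LogSynth {
  constructor(author = "anonymous") {
    this.author = author;
    this.contributors = [];
    this.params = { detune: 0 }; // "public" variables for a UI or MIDI mapping
    this.events = [];
  }
  triggerAttack(midi, velocity, time) {
    this.events.push({ type: "attack", midi, velocity, time });
  }
  triggerRelease(midi, time) {
    this.events.push({ type: "release", midi, time });
  }
}

// Walk a pattern and call each voice's synth, converting beats to seconds.
function schedulePattern(pat, synths) {
  const secondsPerBeat = 60 / pat.bpm;
  for (const voice of pat.voices) {
    const synth = synths[voice.synth];
    for (const note of voice.notes) {
      synth.triggerAttack(note.midi, note.velocity, note.start * secondsPerBeat);
      synth.triggerRelease(note.midi, (note.start + note.duration) * secondsPerBeat);
    }
  }
}

const lead = new LogSynth();
schedulePattern(pattern, { lead });
console.log(lead.events.length); // 4
```

Because every synth answers to the same two methods, any pattern could be played back with any synth, which is the part that makes remixing possible.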

Following up on the melody sequencer, here’s a drum sequencer! It runs on socket.io in a collabo with Jeff Ong. It saves the current pattern on a server, and so everyone who accesses the site is modifying the same pattern live, together.

Here’s my github repo for Code of Music.

The first assignment was to make a melody sequencer, and here’s where I’m at with that:

I used p5.js and p5.sound. I created an array of Oscillators and Envelopes in order to make it polyphonic. Right now the noteArray is just 6 notes of a pentatonic scale, which always sounds great. But it can be modified or extended. In the long run I’d like to be able to tweak the scale on the fly, including expanding/shrinking it. Same goes for the wDiv variable, which represents how many beat divisions there are in this little loop. I need to tweak the mapping of block to mouse position to make sure that the blocks appear where the user clicks. This probably means switching to p5’s rectMode(CENTER).
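The mouse-to-block mapping I have in mind looks roughly like this. It's a simplified sketch without any p5 calls: the values in `noteArray` and `wDiv` are assumptions (MIDI notes of a C major pentatonic, 8 divisions), and `cellFromMouse` and `midiToFreq` are written out here just for illustration.

```javascript
const noteArray = [60, 62, 64, 67, 69, 72]; // assumed: C major pentatonic as MIDI notes
const wDiv = 8;                             // assumed: beat divisions in the loop

// Map a mouse position to a grid cell: columns are beat divisions,
// rows are notes. Results are clamped so edge clicks stay on the grid.
function cellFromMouse(mouseX, mouseY, width, height, cols = wDiv, rows = noteArray.length) {
  const col = Math.floor(mouseX / (width / cols));
  const row = Math.floor(mouseY / (height / rows));
  return {
    col: Math.min(cols - 1, Math.max(0, col)),
    row: Math.min(rows - 1, Math.max(0, row)),
  };
}

// Standard MIDI-to-frequency conversion (A4 = MIDI 69 = 440 Hz).
function midiToFreq(midi) {
  return 440 * Math.pow(2, (midi - 69) / 12);
}

// A click at (150, 250) on an 800x600 canvas lands in column 1, row 2.
console.log(cellFromMouse(150, 250, 800, 600)); // { col: 1, row: 2 }
```

Changing the scale or the number of divisions on the fly would then only mean swapping `noteArray` or `wDiv`, since the mapping reads both.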

I developed the p5.sound library for Google Summer of Code, but didn’t get as deep into musical timing and synthesis as I would like. I’d like to keep refining it throughout this class, and already made a couple improvements in the process of making the melody sequencer.

For the first day of Code of Music, we paired up, found online sequencers, tinkered with them, and then reimagined them after drawing two oblique strategy cards.

I was delighted to see Billy playing with this Fruity Loops emulator.

I <3 FRUITY LOOPS

Like so many others around the world, I acquired a copy of Fruity Loops as a teenager, and it transformed the way I approach music.

I’d previously messed around with Audio Mulch, which I found to be very confusing. I made some music with it, but it wasn’t clear to me where the sound was coming from or what I was doing to shape the sound.

Fruity Loops was the exact opposite. You open it up, hit play, start filling in a grid, and that grid becomes a musical building block.

I came to FL with a bit of a musical background. I took a few piano lessons when I was younger (what I really wanted to do was play the drums, but somebody told my mom that piano is a percussion instrument, too). I also had about a year’s worth of guitar lessons. I had a vague understanding of musical notation, but couldn’t quite sight read on either instrument. I had come up with a few musical ideas, but never thought to transcribe them—musical notation seemed very detached from the music itself.

Fruity Loops taught me to approach music in terms of Pattern Mode and Song Mode. FL always starts with Pattern mode. In Pattern mode, you have rows representing individual drum hits, each with 32 columns to fill. The columns are slightly tinted, and as you start to fill them in, you realize, intuitively, that four columns is equal to one quarter note. In other words, you start with two bars of music. FL could be thought of as a sort of musical notation. But I never really considered any of that. I just wanted to play with patterns.
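The arithmetic behind that grid is simple enough to write down. This is just my own sketch of the timing, not anything from Fruity Loops itself; `stepTimes` and `barsInPattern` are made-up names, and I'm assuming 4/4 time.

```javascript
// Start time in seconds of every step in a pattern.
function stepTimes(bpm, steps = 32, stepsPerBeat = 4) {
  const secondsPerStep = 60 / bpm / stepsPerBeat;
  return Array.from({ length: steps }, (_, i) => i * secondsPerStep);
}

// How many bars a pattern covers, assuming 4 beats per bar by default.
function barsInPattern(steps = 32, stepsPerBeat = 4, beatsPerBar = 4) {
  return steps / (stepsPerBeat * beatsPerBar);
}

console.log(barsInPattern());  // 2
console.log(stepTimes(120)[4]); // 0.5 (the second quarter note at 120 BPM)
```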

I think every FL user spends a lot of time in Pattern Mode before they advance to Song Mode. Song Mode is similar to Pattern Mode, but on a larger scale. Your blocks are no longer individual drum hits, but entire 8-bar patterns. In Fruity Loops, a Song is simply a bunch of organized Patterns.

I started to use Fruity Loops with friends. We experimented with different ways to shape the sounds. We imported our own samples recorded with a PC microphone. My friend Justin had more traditional music training than I did, and he inspired me to start using the Piano Roll to experiment with melody and harmony. Together, we tried to recreate pop songs like Aaliyah’s “Are You That Somebody” and I think we came pretty close. As I became more serious about music in general, I kept coming back to Fruity Loops as a reference point. For example, I took a Ghanaian drumming class in college, which sent polyrhythms floating around my head, and I tried to transcribe these ideas in Fruity Loops. Interlocking rhythms challenged the Fruity Loops view of music as starting on the one, but I figured out ways to make it work.

Though I haven’t made any music with Fruity Loops in nearly a decade, it’s been very influential on my conception of music, both as a listener and as a composer. It helped me break musical structure and rhythm into bite-sized blocks. And Fruity Loops’ views (pattern mode, song mode, and piano roll) correlate with those of other DAWs.

Fruity Loops Re-Imagined

Billy and I drew two oblique strategies:

- overtly/openly resist change

- once the search has begun, something will be found

We attempted to apply these to the online FL emulator. Billy had never used FL before, and the online FL emulator actually falls short of recreating some of the most intuitive pieces of Fruity Loops. For one thing, it doesn’t load with any sounds: you have to drag them in.

Our sequencer would have preset sounds, openly resisting change so that if users want to bring new sounds in, they can search for sounds easily, and “once the search has begun, something (good) will be found.”

Some features didn’t seem very necessary. For example, one of the first parameters Billy started tweaking was the panning knob. To me, Fruity Loops is all about creating rhythms, and the mix (volume, panning, etc.) comes later, so maybe that should not be a feature that can be changed as immediately, resisting change until you have a reason to change it.

One of the things I love about FL is the way beats are divided up so you can sense where the beats are without knowing anything about music theory, time signatures or the definition of a “quarter note.” It might be interesting if our sequencer had a metronome to help guide the beat to fall in the right place to start. I think this could be a nice constraint especially for new users, to have some pattern pre-loaded.

With melody, FL has a piano roll which can be intimidating. I would like to treat melodic voices more freely, without needing to set every note (and figure out some music theory in the process). I’m inspired by the app Figure which lets you set the number of notes in a scale and some aspects about it, and I think having a preset scale of notes available at first might be a fun way to guide users.

My design approach definitely leans towards helping people make music whether or not they consider themselves “musicians,” and I think that these types of constraints can also be inspiring for anyone, regardless of their musical background. To me, the oblique strategy of resisting change means limiting options at first, but it is still open to change down the line. Most importantly, once the search has begun—i.e. once the user has an idea of something they want to do, whether it’s a more complex melody or a panning/mixing idea—that option needs to be easily findable.