Here’s my GitHub repo for Code of Music.

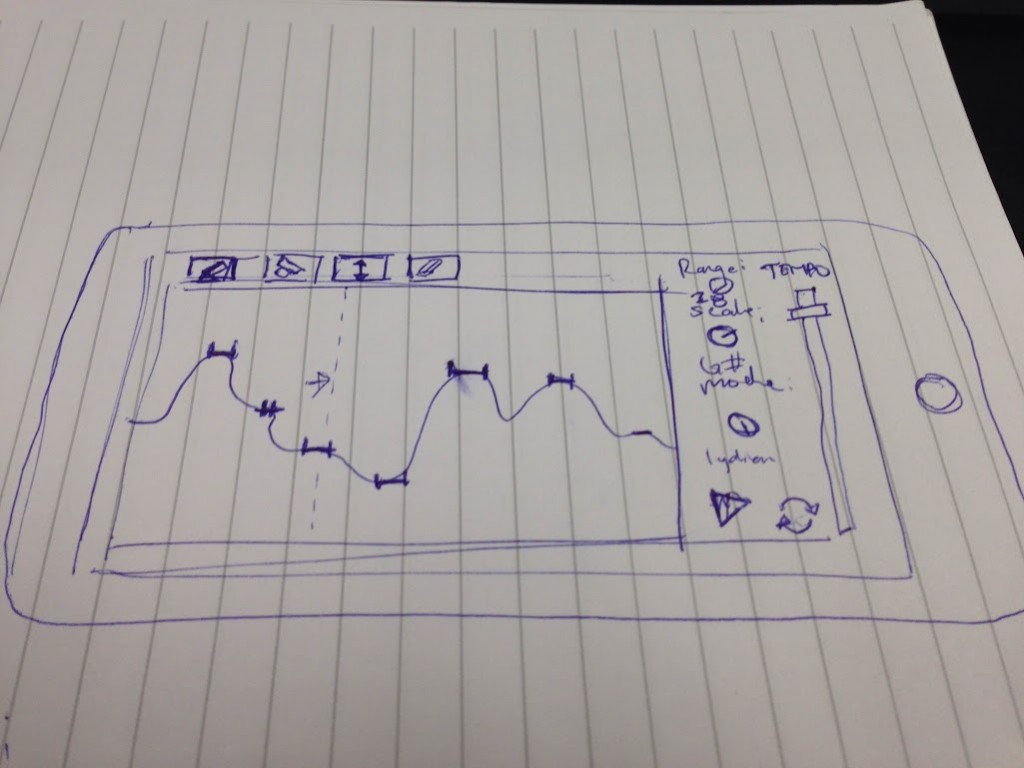

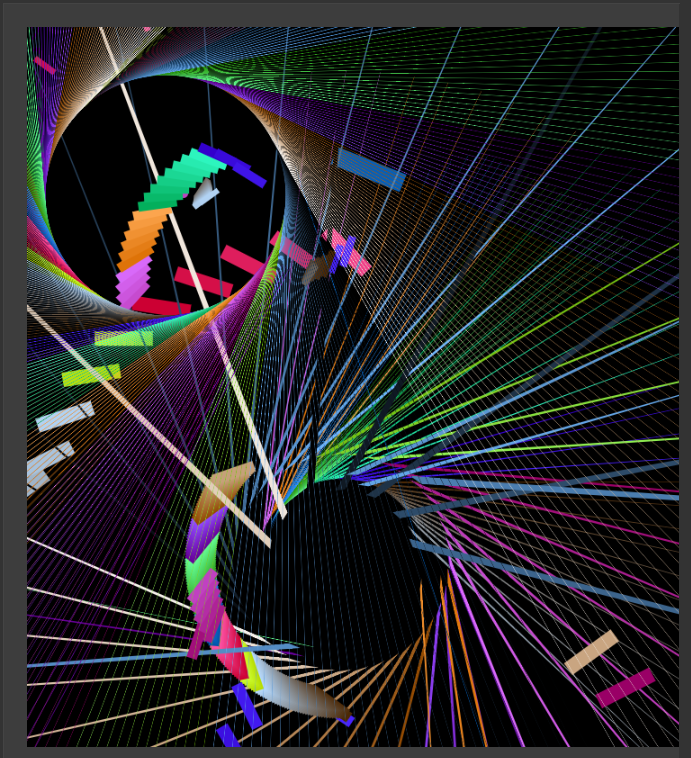

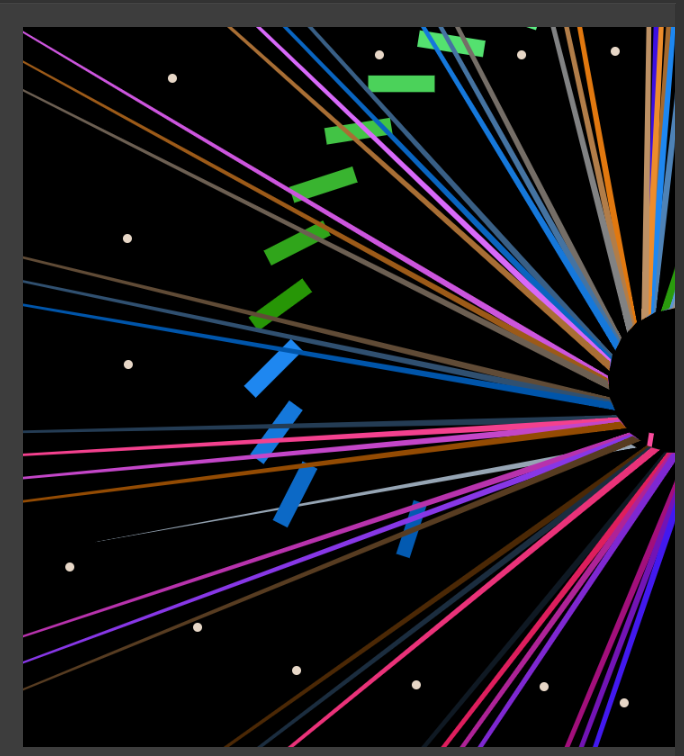

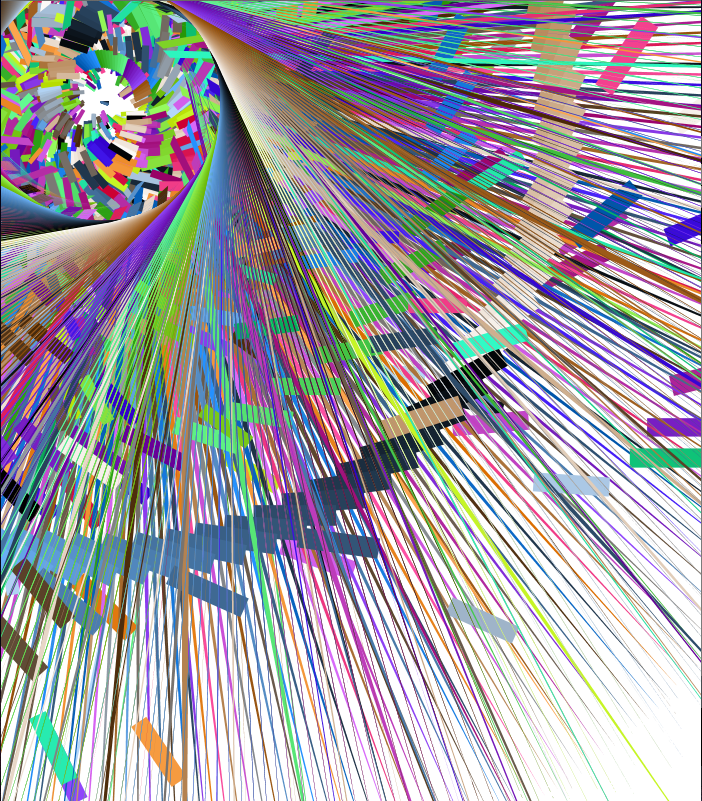

The first assignment was to make a melody sequencer, and here’s where I’m at with that:

I used p5.js and p5.sound. To make it polyphonic, I created an array of Oscillators and an array of Envelopes. Right now the noteArray is just 6 notes of a pentatonic scale, which always sounds great. But it can be modified or extended, and in the long run I’d like to be able to tweak the scale on the fly, including expanding or shrinking it. Same goes for the wDiv variable, which represents how many beat divisions there are in this little loop.

I still need to tweak the mapping from mouse position to block so that the blocks appear exactly where the user clicks. This probably means switching to p5’s rectMode(CENTER).
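Here’s a rough sketch of how that click-to-block mapping could work. It isn’t the code from my repo: only noteArray and wDiv are names from the sketch, and the MIDI note values, the 8 divisions, and the helper names (midiToHz, cellFromMouse, cellCenter, noteForRow) are assumptions for illustration. The idea is to snap the mouse position to a grid cell, then draw the block at that cell’s center, which is the point rect() expects once rectMode(CENTER) is set.

```javascript
// Names from the sketch; the values here are assumed for illustration.
const noteArray = [60, 62, 64, 67, 69, 72]; // assumed: MIDI notes, C major pentatonic plus the octave
const wDiv = 8;                             // assumed: 8 beat divisions in the loop

// Standard equal-temperament conversion (MIDI 69 = A4 = 440 Hz).
// Hypothetical helper, named to avoid clashing with anything in p5.sound.
function midiToHz(midiNote) {
  return 440 * Math.pow(2, (midiNote - 69) / 12);
}

// Snap a mouse position to a grid cell (hypothetical helper).
// cols = beat divisions (wDiv), rows = number of notes (noteArray.length).
function cellFromMouse(mx, my, w, h, cols, rows) {
  const clamp = (v, lo, hi) => Math.min(hi, Math.max(lo, v));
  const col = clamp(Math.floor(mx / (w / cols)), 0, cols - 1);
  const row = clamp(Math.floor(my / (h / rows)), 0, rows - 1);
  return { col, row };
}

// Center of a cell in pixels: pass this to rect() after rectMode(CENTER).
function cellCenter(col, row, w, h, cols, rows) {
  const cellW = w / cols;
  const cellH = h / rows;
  return { x: (col + 0.5) * cellW, y: (row + 0.5) * cellH };
}

// Assumed layout: the top row (row 0) plays the highest note.
function noteForRow(row) {
  return noteArray[noteArray.length - 1 - row];
}
```

In a p5 sketch, mousePressed() would call cellFromMouse(mouseX, mouseY, width, height, wDiv, noteArray.length) and toggle that cell, and draw() would use cellCenter() to place each block. Because the grid is derived from wDiv and noteArray.length, changing either one later should resize the grid without touching the mapping.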

I developed the p5.sound library for Google Summer of Code, but didn’t get as deep into musical timing and synthesis as I would have liked. I’d like to keep refining it throughout this class, and I’ve already made a couple of improvements in the process of making the melody sequencer.